A team at U.C. Berkeley, led by artificial intelligence (AI) expert Stuart Russell, has created the new Center for Human-Compatible Artificial Intelligence, which focuses on ensuring that AI systems are beneficial to human beings. Russell is the co-author of “Artificial Intelligence: A Modern Approach”, which is regarded as the standard text in the AI field, and an advocate for incorporating human values into AI design. The center was launched with a $5.5 million grant from the Open Philanthropy Project, with additional grants from the Future of Life Institute and the Leverhulme Trust.

Russell quickly dismissed the imaginary threat of the sentient, evil robots of science fiction. The real issue, he explained, is that machines as currently designed in fields such as robotics, operations research, control theory and AI take the objectives humans give them very literally. Using the Cat in the Hat as an analogy, he said that a domestic robot told to do a task may carry it out without understanding how that task ranks against the other things people value.

The center aims to develop ways to guarantee that the most sophisticated AI systems, which might perform essential services for people or be entrusted with control over critical infrastructure, act in a way that is aligned with human values. Russell said, “AI systems must remain under human control, with suitable constraints on behavior, despite capabilities that may eventually exceed our own. This means we need cast-iron formal proofs, not just good intentions.”

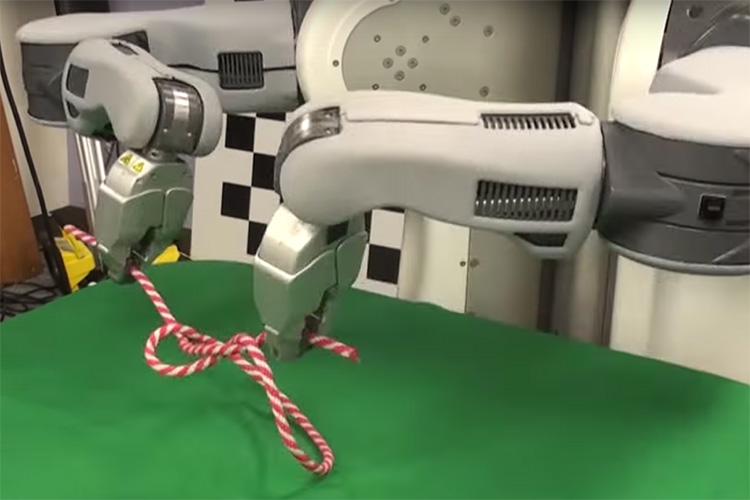

One approach that Russell and his team are exploring is so-called “inverse reinforcement learning”, which allows robots to learn about human values by observing human behavior. By watching how people behave in their daily lives, robots would learn how much people value different things. Explaining this, Russell said, “Rather than have robot designers specify the values, which would probably be a disaster, instead the robots will observe and learn from people. Not just by watching, but also by reading. Almost everything ever written down is about people doing things, and other people having opinions about it. All of that is useful evidence.”
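To make the idea of learning values from behavior more concrete, here is a minimal Python sketch of a heavily simplified, one-step version of inverse reinforcement learning. It is an illustration, not the center's method. It assumes that (1) a person's reward is a linear function of some hypothetical features of each option, (2) the person is "Boltzmann-rational", choosing each option with probability proportional to exp(beta × reward), a common modeling assumption in the IRL literature, and (3) candidate reward weights come from a small hypothetical grid. The option names, features, `beta` value and observations are all made up. Full IRL infers rewards over sequences of decisions in a Markov decision process; this sketch only scores single choices.

```python
import math
from itertools import product

# Hypothetical options a person might choose between, each described by
# two made-up features: (task_done, item_damaged).
OPTIONS = {
    "clean_with_towel": (1.0, 0.0),
    "clean_with_dress": (1.0, 1.0),
    "leave_dirty": (0.0, 0.0),
}

def reward(weights, features):
    """Linear reward: dot product of weights and features."""
    return sum(w * f for w, f in zip(weights, features))

def choice_prob(weights, chosen, beta=2.0):
    """Probability that a Boltzmann-rational person picks `chosen`.

    `beta` is an assumed rationality parameter: higher means the person
    more reliably picks the highest-reward option.
    """
    scores = {name: beta * reward(weights, f) for name, f in OPTIONS.items()}
    m = max(scores.values())  # subtract the max for numerical stability
    z = sum(math.exp(s - m) for s in scores.values())
    return math.exp(scores[chosen] - m) / z

def infer_weights(observed, grid):
    """Return the candidate weights that best explain the observed choices.

    With a uniform prior over the grid, the maximum-likelihood candidate
    is also the maximum a posteriori one.
    """
    def log_lik(w):
        return sum(math.log(choice_prob(w, c)) for c in observed)
    return max(grid, key=log_lik)

# Hypothetical grid: (value of finishing the task, value of damaging an item).
GRID = list(product([-1.0, 0.0, 1.0], repeat=2))

# Hypothetical observations of what one person actually did.
observations = ["clean_with_towel", "clean_with_towel",
                "leave_dirty", "clean_with_towel"]

best = infer_weights(observations, GRID)
print(best)  # → (1.0, -1.0)
```

Because the observed person usually finishes the task and never damages anything, the best-scoring weights value task completion positively and penalize damage, even though nobody stated those values directly. The occasional "leave_dirty" choice is tolerated rather than treated as contradictory, which is why a probabilistic model of imperfect behavior is used instead of assuming people always act optimally.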

However, Russell acknowledged that this will not be easy, since people's values vary widely and people are far from perfect at putting them into practice. He said this can cause problems for robots that are trying to learn exactly what people want, because different individuals will have conflicting desires. Summing things up, Russell said, “In the process of figuring out what values robots should optimize, we are making explicit the idealization of ourselves as humans. As we envision AI aligned with human values, that process might cause us to think more about how we ourselves really should behave, and we might learn that we have more in common with people of other cultures than we think.”

Among the principal investigators helping Russell with the new center are cognitive scientist Tom Griffiths and computer scientist Pieter Abbeel.

Reference

https://news.berkeley.edu/2016/08/29/center-for-human-compatible-artificial-intelligence/
